How Copilot in Microsoft Dynamics 365 and Power Platform delivers enterprise-ready AI built for security and privacy

Thomas Wisniewski

Microsoft Employee

Over the past few months, the world has been captivated by generative AI and applications like the new chat experience in Bing, which can generate original text responses from a simple prompt written in natural language. With the introduction of generative AI across Microsoft business applications, including Microsoft Dynamics 365, Viva Sales, and Power Platform, interactions with AI across business roles and processes will become second nature. With Copilot, Microsoft Dynamics 365 and Power Platform introduce a new way to generate ideas and content drafts, and methods to access and organize information across the business.

Before your business starts using Copilot capabilities in Dynamics 365 and Power Platform, you may have questions about how it works, how it keeps your business data secure, and other important considerations. The answers to common questions below should help your organization get started.

What's the difference between ChatGPT and Copilot?

ChatGPT is a general-purpose large language model (LLM) trained by OpenAI on a massive dataset of text, designed to engage in human-like conversations and answer a wide range of questions on various topics. Copilot also uses an LLM; however, the enterprise-ready AI technology is prompted and optimized for your business processes, your business data, and your security and privacy requirements. For Dynamics 365 and Microsoft Power Platform users, Copilot suggests optional actions and content recommendations in context with the task at hand. A few ways Copilot for natural language generation is unique:

- The AI-generated responses are contextual, relevant to the task at hand, and informed by your business data, whether you are responding to an email from within Dynamics 365, deploying a low-code application that automates a specific manual process, or creating a targeted list of customer segments from your customer relationship management (CRM) system.

- Copilot uses both an LLM, like GPT, and your organization's business data to produce more accurate, relevant, and personalized results. In short, your business data stays within your tenancy and is used to improve context only for your scenario, and the LLM itself does not learn from your usage. More on how the system works is below.

- Powered by Microsoft Azure OpenAI Service, Copilot is designed from the ground up on a foundation of enterprise-grade security, compliance, and privacy.

Read on for more details about these topics.

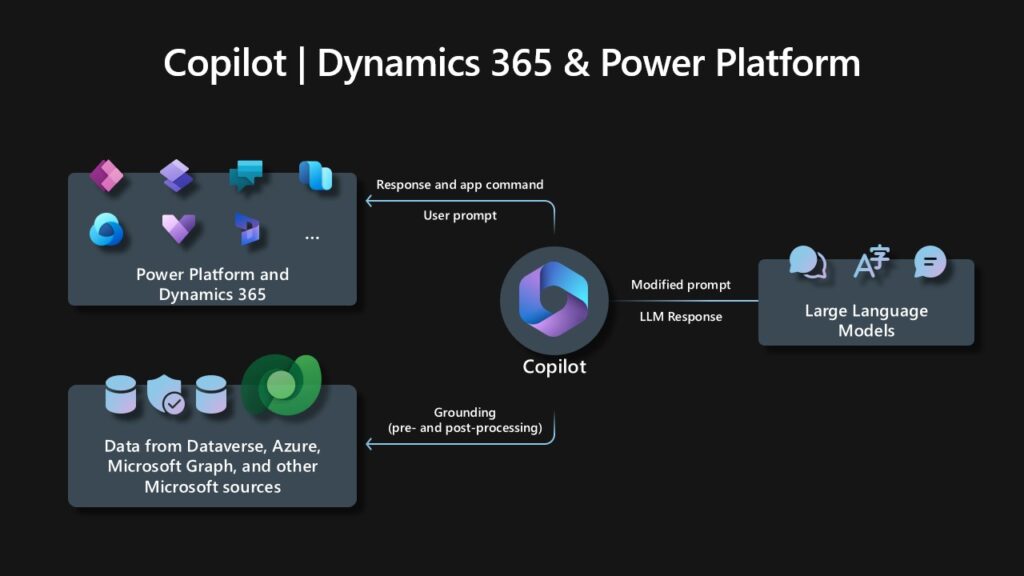

How does Copilot in Dynamics 365 and Power Platform work?

With Copilot, Dynamics 365 and Power Platform harness the power of foundation models coupled with proprietary Microsoft technologies applied to your business data:

- Search (using Bing and Microsoft Azure Cognitive Search): Brings domain-specific context to a Copilot prompt, enabling a response to integrate information from content like manuals, documents, or other data within the organization’s tenant. Currently, Microsoft Power Virtual Agents and Dynamics 365 Customer Service use this retrieval-augmented generation approach as a preprocessing step before calling an LLM.

- Microsoft applications like Dynamics 365, Viva Sales, and Microsoft Power Platform and the business data stored in Microsoft Dataverse.

- Microsoft Graph: Microsoft Graph API brings additional context from customer signals into the prompt, such as information from emails, chats, documents, meetings, and more.

Copilot requests an input prompt from a business user in an app, like Microsoft Dynamics 365 Sales or Microsoft Power Apps. Copilot then preprocesses the prompt through an approach called grounding, which improves the specificity of the prompt, so you get answers that are relevant and actionable to your specific task. It does this, in part, by making a call to Microsoft Graph and Dataverse and accessing the enterprise data that you consent and grant permissions to use for the retrieval of your business content and context. We also scope the grounding to documents and data which are visible to the authenticated user through role-based access controls. For instance, an intranet question about benefits would only return an answer based on documents relevant to the employee's role.
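As a rough illustration of the scoping described above, the sketch below filters candidate documents by the authenticated user's roles before any text is added to a prompt. It is a simplified model under stated assumptions, not Copilot's implementation: `Document`, `User`, `visible_documents`, and `ground_prompt` are hypothetical names, and the real system relies on Microsoft Graph and Dataverse permissions rather than an in-memory list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_roles: frozenset  # hypothetical: roles permitted to read this document


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset


def visible_documents(user, documents):
    """Keep only documents that share at least one role with the user."""
    return [d for d in documents if user.roles & d.allowed_roles]


def ground_prompt(user, question, documents):
    """Build a grounded prompt using only documents the user may see."""
    context = "\n".join(
        f"[{d.title}] {d.text}" for d in visible_documents(user, documents)
    )
    return f"Context:\n{context}\n\nQuestion: {question}"


docs = [
    Document("Benefits (all staff)", "Annual leave is 25 days.",
             frozenset({"employee", "manager"})),
    Document("Benefits (managers)", "Managers receive a car allowance.",
             frozenset({"manager"})),
]
employee = User("Ana", frozenset({"employee"}))
prompt = ground_prompt(employee, "What benefits do I have?", docs)
```

Here the employee's prompt contains the all-staff benefits text but not the manager-only document, which mirrors the intranet benefits example: the access filter runs before the prompt is assembled, so restricted content never reaches the model.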

This retrieval of information is referred to as retrieval-augmented generation and allows Copilot to provide exactly the right type of information as input to an LLM, combining this user data with other inputs such as information retrieved from knowledge base articles to improve the prompt. Copilot takes the response from the LLM and post-processes it. This post-processing includes additional grounding calls to Microsoft Graph, responsible AI checks, security, compliance and privacy reviews, and command generation.

Finally, Copilot returns a recommended response to the user and sends commands back to the apps, where a human in the loop can review and assess them. Copilot iteratively processes and orchestrates these sophisticated services to produce results that are relevant to your business, accurate, and secure.
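The flow described in the preceding paragraphs (ground the prompt, call the LLM, post-process, hand the result to a person) can be sketched as a small pipeline. This is a toy model under stated assumptions: every name here (`retrieve_context`, `fake_llm`, `post_process`, `run_copilot`, `BLOCKED_TERMS`) is hypothetical, the keyword match stands in for a real search service, and the LLM call is a stub.

```python
from dataclasses import dataclass, field

BLOCKED_TERMS = {"password"}  # hypothetical stand-in for responsible AI checks


@dataclass
class CopilotResult:
    draft: str
    commands: list = field(default_factory=list)
    needs_human_review: bool = True  # a person always reviews the output


def retrieve_context(prompt, knowledge_base):
    """Naive keyword retrieval standing in for a real search service."""
    words = set(prompt.lower().split())
    return [text for text in knowledge_base if words & set(text.lower().split())]


def fake_llm(grounded_prompt):
    """Placeholder for a hosted LLM call; echoes the last prompt line."""
    return "Draft reply based on: " + grounded_prompt.splitlines()[-1]


def post_process(draft, user_prompt):
    """Apply a content check, then derive app commands from the request."""
    if any(term in draft.lower() for term in BLOCKED_TERMS):
        draft = "[response withheld by content check]"
    commands = ["open_email_draft"] if "email" in user_prompt.lower() else []
    return CopilotResult(draft=draft, commands=commands)


def run_copilot(user_prompt, knowledge_base, llm=fake_llm):
    """Ground the prompt, call the model, and post-process the response."""
    context = retrieve_context(user_prompt, knowledge_base)
    grounded = "\n".join(context + ["User request: " + user_prompt])
    return post_process(llm(grounded), user_prompt)


kb = [
    "Refund policy: refunds are issued within 14 days.",
    "Office hours are 9 to 5.",
]
result = run_copilot("Write an email about our refund policy", kb)
```

The result carries both a draft and a suggested command, and `needs_human_review` stays true, reflecting the point that Copilot proposes while a person decides.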

How does Copilot use your proprietary business data? Is it used to train AI models?

Copilot unlocks business value by connecting LLMs to your business data in a secure, compliant, privacy-preserving way.

Copilot has real-time access to both your content and context in Microsoft Graph and Dataverse. This means it generates answers anchored in your business content (your documents, emails, calendar, chats, meetings, contacts, and other business data) and combines them with your working context (the meeting you're in now, the email exchanges you've had on a topic, the chat conversations you had last week) to deliver accurate, relevant, contextual responses.

However, we do not use customers' data to train LLMs. We believe the customers' data is their data, in line with Microsoft's data privacy policy. AI-powered LLMs are trained on a large but limited corpus of data, but prompts, responses, and data accessed through Microsoft Graph and Microsoft services are not used to train Dynamics 365 Copilot and Power Platform Copilot capabilities for use by other customers. Furthermore, the foundation models are not improved through your usage. This means your data is accessible only by authorized users within your organization unless you explicitly consent to other access or use.

Are Copilot responses always factual?

Responses produced with generative AI are not guaranteed to be 100 percent factual. While we continue to improve responses to fact-based inquiries, people should still use their judgement when reviewing outputs. Our copilots leave you in the driver’s seat, while providing useful drafts and summaries to help you achieve more.

Our teams are working to address issues such as misinformation and disinformation, content blocking, data safety and preventing the promotion of harmful or discriminatory content in line with our AI principles.

We also provide guidance within the user experience to reinforce the responsible use of AI-generated content and actions. To help guide users on how to use Copilot, as well as properly use suggested actions and content, we provide:

- Instructive guidance and prompts. When using Copilot, informational elements instruct users how to responsibly use suggested content and actions, including prompts to review and edit responses as needed before use and to manually check facts, data, and text for accuracy.

- Cited sources. Copilot cites public sources when applicable so you're able to see links to the web content it references.

How does Copilot protect sensitive business information and data?

Microsoft is uniquely positioned to deliver enterprise-ready AI. Powered by Azure OpenAI Service, Copilot features built-in responsible AI and enterprise-grade Azure security.

Built on Microsoft's comprehensive approach to security, compliance, and privacy. Copilot is integrated into Microsoft services like Dynamics 365, Viva Sales, Microsoft Power Platform, and Microsoft 365, and automatically inherits all your company's valuable security, compliance, and privacy policies and processes. Two-factor authentication, compliance boundaries, privacy protections, and more make Copilot the AI solution you can trust.

Architected to protect tenant, group, and individual data. We know data leakage is a concern for customers. LLMs are not further trained on, or learn from, your tenant data or your prompts. Within your tenant, our time-tested permissions model provides safeguards and enterprise-grade security as seen in our Azure offerings. And on an individual level, Copilot presents only data you can access using the same technology that we've been using for years to secure customer data.

Designed to learn new skills. Copilot's foundation skills are a game changer for productivity and business processes. The capabilities allow you to create, summarize, analyze, collaborate, and automate using your specific business content and context. But it doesn't stop there. Copilot recommends actions for the user (for example, "create a time and expense application to enable employees to submit their time and expense reports"). And Copilot is designed to learn new skills. For example, with Viva Sales, Copilot can learn how to connect to CRM systems of record to pull customer data, like interaction and order histories, into communications. As Copilot learns about new domains and processes, it will be able to perform even more sophisticated tasks and queries.

Will Copilot meet requirements for regulatory compliance mandates?

Copilot is offered within the Azure ecosystem and thus our compliance follows that of Azure. In addition, Copilot adheres to our commitment to responsible AI, which is described in our documented principles and summarized below. As regulation in the AI space evolves, Microsoft will continue to adapt and respond to fulfill future regulatory requirements in this space.

Next-generation AI across Microsoft business applications

With next-generation AI, interactions with AI across business roles and processes will become second nature.

Committed to responsible AI

Microsoft is committed to creating responsible AI by design. Our work is guided by a core set of principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. We are helping our customers use our AI products responsibly, sharing our learnings, and building trust-based partnerships. For these new services, we provide our customers with information about the intended uses, capabilities, and limitations of our AI platform service, so they have the knowledge necessary to make responsible deployment choices.

Take a look at related content

- Introducing Microsoft Dynamics 365 Copilot, the world's first copilot in both CRM and ERP, that brings next-generation AI to every line of business

- Power Platform is leading a new era of AI-generated low-code app development

- Copilot in Viva Sales available as thousands of customers adopt next-generation AI in Dynamics 365 Copilot and Power Platform

The post How Copilot in Microsoft Dynamics 365 and Power Platform delivers enterprise-ready AI built for security and privacy appeared first on Microsoft Dynamics 365 Blog.
