Step by Step: integrate D365F&O with Microsoft Fabric Lakehouse

Views (423)

Hi friends: my today's topic is a much awaited topic on Integration between D365F&O with Azure Fabric Lakehouse. It's becoming a predominant topic of discussion whereby Microsoft is planning and targetting to bring everyting under one roof of Microsoft Fabric, which not only houses humoungous tools for Data extraction, ingestion, transformation, but also on Reporting and Data presentation.

Before we begin, let me give a sshort recap, on what is Microsoft Fabric? And how is Lakehouse even related here:

Microsoft Fabric is an end-to-end analytics and data platform designed for enterprises that require a unified solution. It encompasses data movement, processing, ingestion, transformation, real-time event routing, and report building. Microsoft Fabric is a data analytics platform that helps organizations manage, access, and act on data. It's designed to address all aspects of an organization's analytics needs, including all ingredients of data movement like: data processing, ingestion, and transformation, as well as real-time analytics to allow users to explore, analyze, and act on large volumes of streaming data in near-real time.

Not to mention enough rooms for a number of services from Data Science like Data Lake and ingestion.

Just before we jump to our next topic, let me introduce you to Lakehouse (just in case, you've not encountered them already): which is a very powerful data architecture platform for storing, managing, and analyzing structured and unstructured data in a single location.

This article is about setting up a seamless integration between D365F&O to Lakehouse. Here are the steps to follow:

Step 1:

Let us start with creating a Azure SQL DB, with the following as basic settings:

Under Networking Tab, select the following settings to allow the current user to access the databse server and current client IP address:

Click on Review + create >> Create to start the creation of the databsase and its deployment in Azure.

Step2;

Once the deployment is over and the resource is ready, come to Settings Connection Strings and copy the SQL Authentication connection string:

Let us now come to Dynamics365F&O >> Data management >> Configure entity export to Database:

Paste the connection string which you copied earlier >> don't forget to change the admin password which you created for Azure SQL Server:

And click on Publish. The following Screen of the Azure SQL connect will open, which you've already configured:

Click on Publish to enable the Vendor Group entity to be extracted as a job, in Azure SQL.

And yes, this will schedule it as a job:

And yes, this will schedule it as a job:

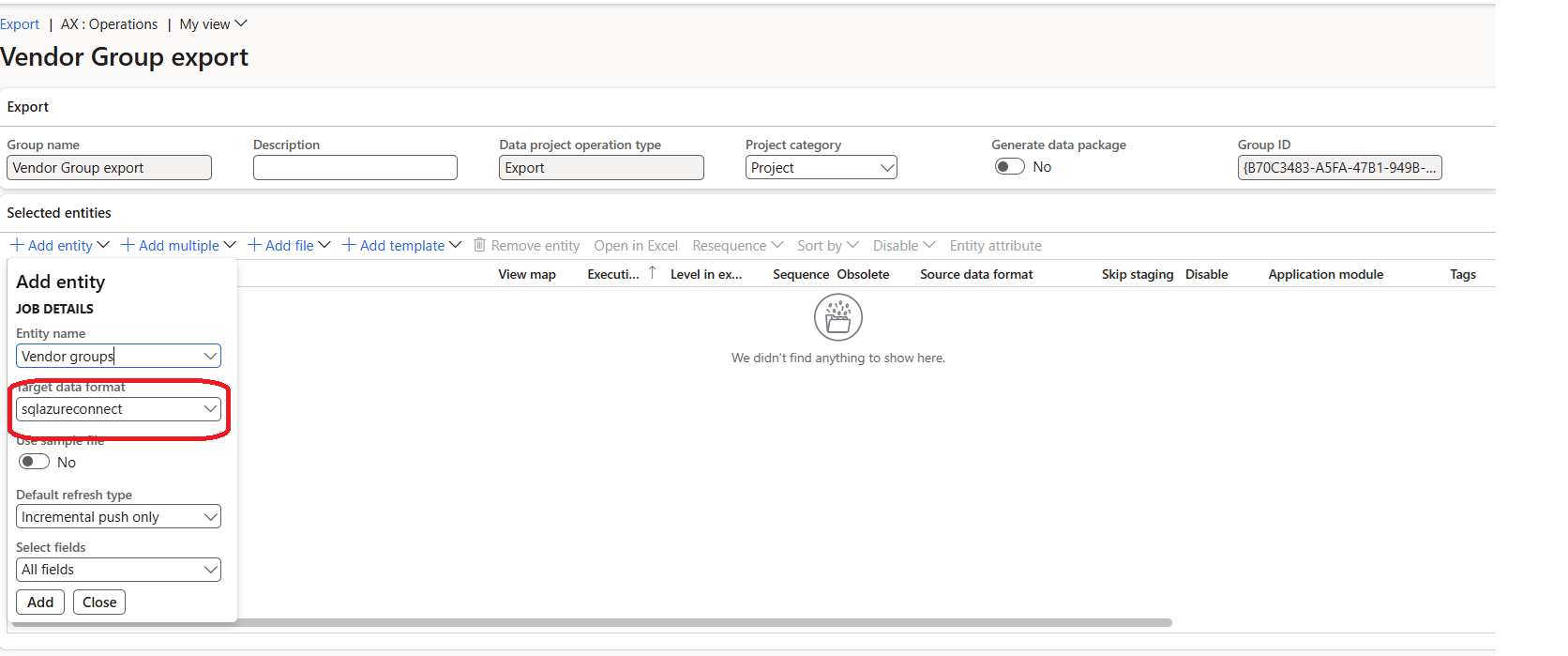

Next create an export definition Group for Vendor group and add entity: Vendor group, and target data format as SQLAzureConnect:

Schedule this as a Batch export.

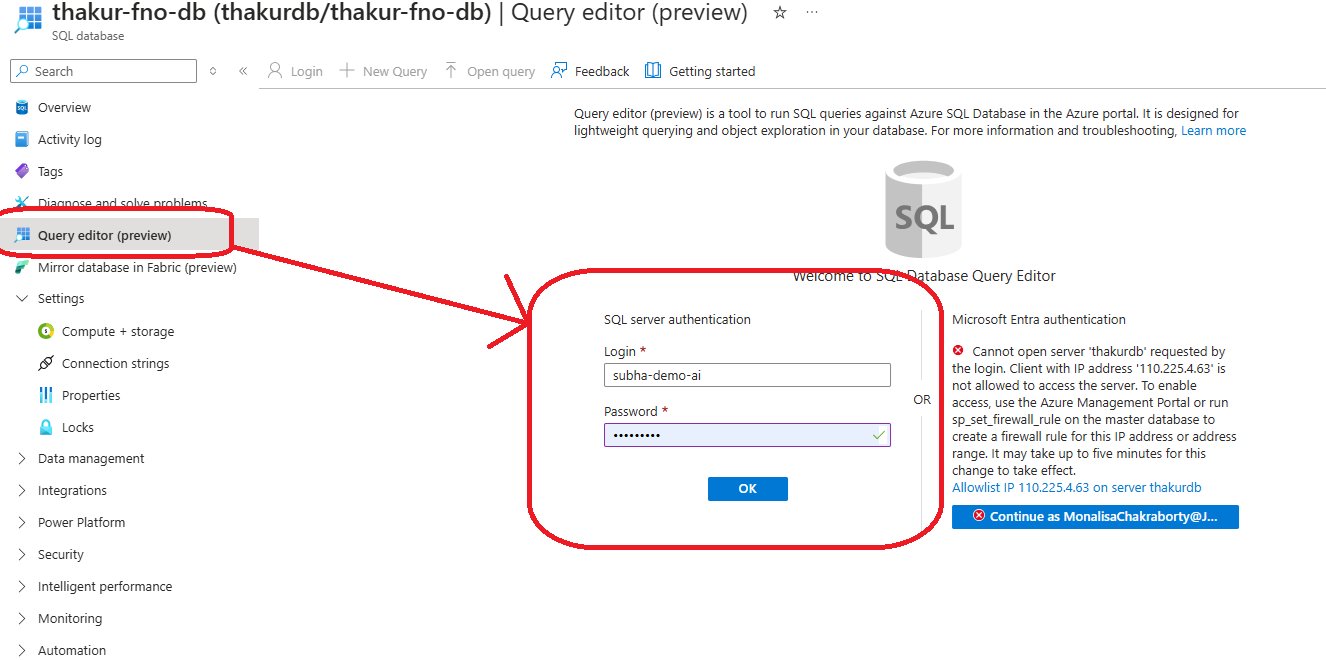

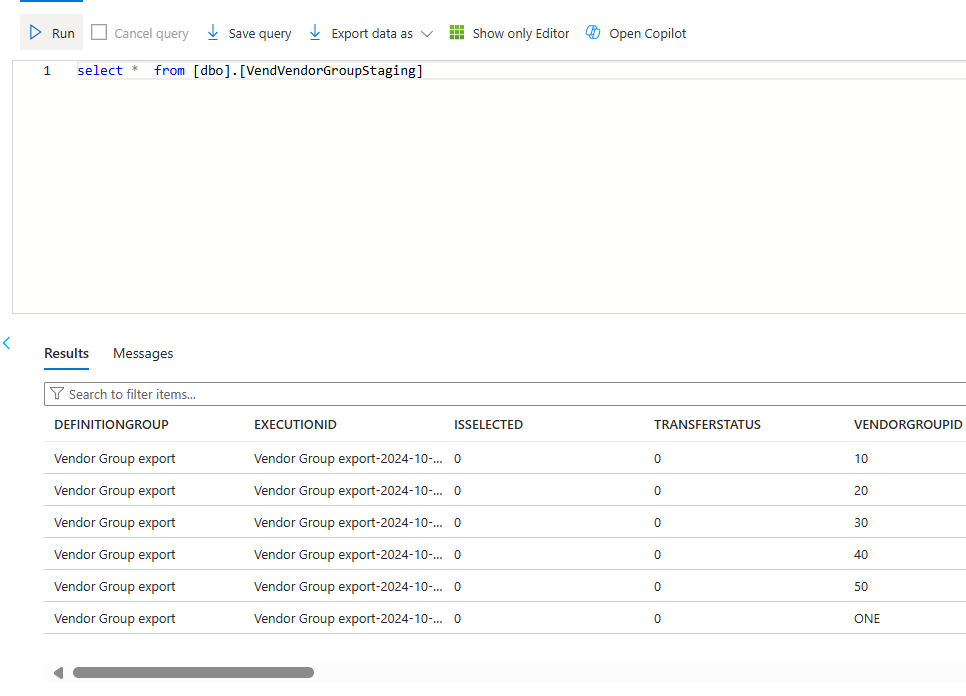

All ready -- wait for few moments, and then you connect to Azure SQL:

Going to Microsoft Fabric >> Workspaces >> My workspace (alternately you can create new workspace) >> Lakehouse >> Get Data >> New Data Pipeline:

From the following propmpt, select View More:

Coming back to Azure SQL DB >> Overview >> You will get trhe necessary information to fill up the following screen:

Please note: I am selecting Basic Authentication for my Demo purpose, but you should choose Service principal as Best preactise.

Select Next.

Select the Vendor group Staging Table which you have got data populated in Azure SQL. Click Next >> select Table >> select Load to New table Option:

Click Save + Run >> to start the data fetching.

The pipeline will initially show being in Queued:

And then in sometime it will show as Succeeded.

And yes: as expected -- the Vend group table will be shown as a new table in the Lakehouse:

Addtionally you can also set this up as a scheduler to bring in data in a certain frequency (as a batch), so that in D365FO end the entity is exported incrementally, the same is going to be replicated in Fabric, accordingly.

Whew, with that I am concluding this discussion. Wishing you an awesome festival of light: Happy Diwali.

See you soon with another blog on Data engineering. Much Love and Namaste 💓💓💓

Like

Like Report

Report

*This post is locked for comments