Processing Azure Blob Storage events with Azure Event Grid, Logic Apps and Azure Functions (real case)

Stefano Demiliani


Problem raised by a customer: “We are using Azure Blob Storage with a Dynamics 365 Business Central solution. The solution allows users to create and delete files in a blob storage container directly from Business Central. We would like a process that, when a file is deleted from the blob storage, automatically copies it into a backup storage and sends a notification to the IT department.”

Partner solution: a codeunit with the following procedures:

- Procedure to handle the upload of files into a blob storage container

- Procedure to handle the deletion of blobs from a storage account

- Procedure to copy a blob from storage A to storage B before deletion

- Procedure to send emails to a mail address

- …

Main issues:

- reliability of the entire process (some errors can break the backup process)

- performance of the transaction

I think this is an example of a solution that “normally works” (but do you really want a solution that only usually works?), but that I cannot define as “cloud-optimized” or “well-architected”. Why? Mainly because:

- You should not handle all processes in AL code (I will never stop saying that!)

- In a cloud world you can do it better

How can we rearchitect this workflow to get a more powerful and reliable process in the cloud? Just by using the right services instead of doing everything inside the ERP code!

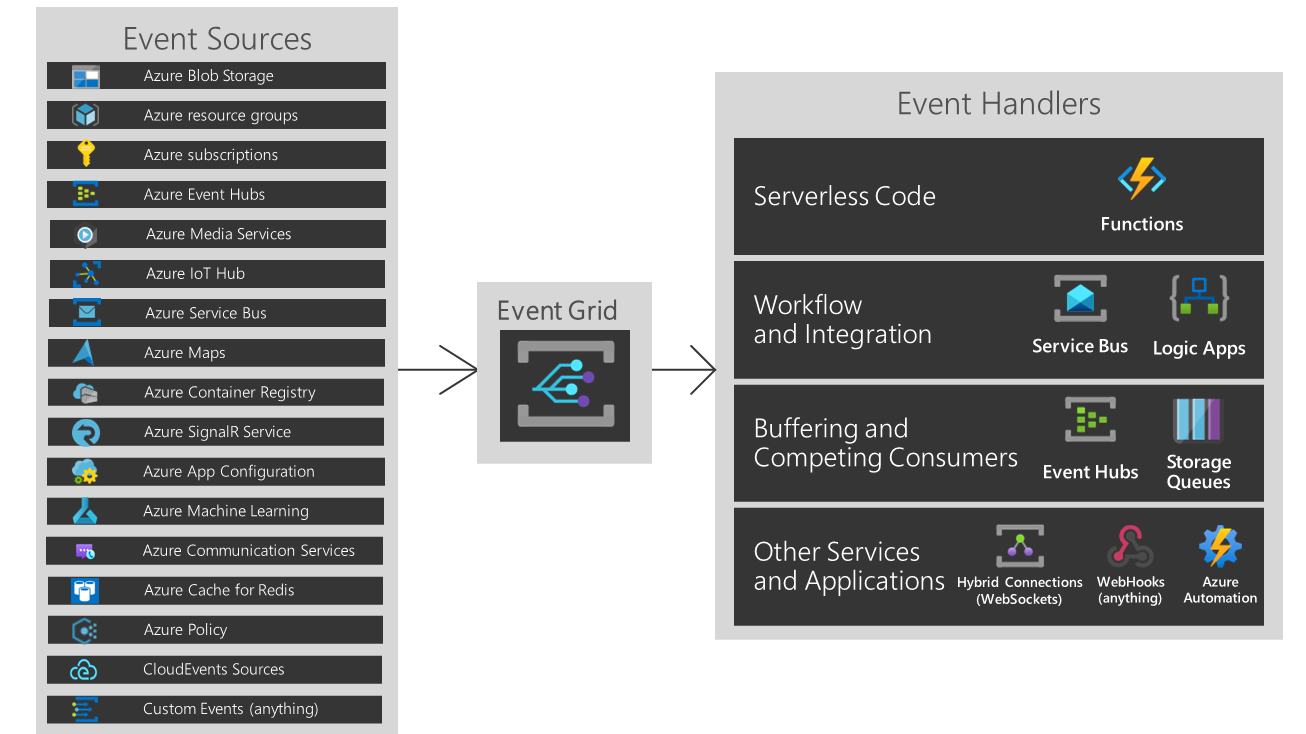

You can react reliably to Azure Storage events by using Azure Event Grid. Event Grid is a highly scalable, serverless event broker that you can use to integrate applications using events (event-driven architecture). Event Grid delivers events to subscriber destinations such as applications, Azure services, or any endpoint to which it has network access. The sources of those events can be other applications, SaaS services and Azure services. With Event Grid you can use events to notify other applications or services of system state changes, use filters to route specific events to different endpoints, multicast to multiple endpoints, and make sure your events are reliably delivered.
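To make the routing model concrete, here is a deliberately tiny, in-process C# toy (the names ToyEventBroker and BrokerEvent are hypothetical): each subscription pairs a filter with a handler, and publishing an event multicasts it to every subscriber whose filter matches. It is only a conceptual sketch, not the Event Grid SDK, and it ignores what Event Grid adds in practice (durable delivery, retries, dead-lettering, network endpoints).

```csharp
using System;
using System.Collections.Generic;

// Conceptual toy only: an in-process stand-in for an event broker.
// NOT the Event Grid SDK; no persistence, retries or network delivery.
public sealed record BrokerEvent(string EventType, string Subject);

public sealed class ToyEventBroker
{
    private readonly List<(Func<BrokerEvent, bool> Filter, Action<BrokerEvent> Handler)> _subscriptions = new();

    // Registers a subscriber together with the filter that decides which events it receives.
    public void Subscribe(Func<BrokerEvent, bool> filter, Action<BrokerEvent> handler) =>
        _subscriptions.Add((filter, handler));

    // Multicasts the event to every subscriber whose filter matches; returns how many received it.
    public int Publish(BrokerEvent e)
    {
        int delivered = 0;
        foreach (var (filter, handler) in _subscriptions)
        {
            if (!filter(e)) continue;
            handler(e);
            delivered++;
        }
        return delivered;
    }
}
```

For example, a subscription filtered on an event type of Microsoft.Storage.BlobDeleted receives a deletion event but not a creation event, while a second subscription filtered on the subject can receive the same deletion event in parallel.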

In a modern SaaS architecture, the previously described process can be implemented as in the following diagram:

Here:

- A blob storage (here called main_storage) sends a deletion event to Azure Event Grid every time you delete a blob.

- Azure Event Grid triggers an Azure Logic App instance (the event handler).

- The Logic App sends an email to the IT department with the details of the deleted file.

- The Logic App triggers an Azure Function passing the details of the blob deletion event.

- The Azure Function handles the processing of the deleted blob, such as:

  - Logging the deletion details in Azure Application Insights

  - Archiving a copy of the deleted file into another storage account (here called backup_storage).

How can you configure the architecture?

The main part here is configuring the Event Grid source and destination. Before doing that, remember that in order to have events correctly triggered in a subscription, the Event Grid resource provider must be registered. This is an important thing to remember because, if it’s not registered, your resource events are never triggered (and you lose hours checking why…).

To do that, select your subscription, then click on Resource Providers and search for “Microsoft.EventGrid”. If the provider is not registered, click on Register to enable it:

This is mandatory in order to have events correctly routed.

Now, let’s configure an event subscriber by creating a new blank Azure Logic App (here on the Consumption plan, which is recommended if you don’t expect a large number of these events per day). In the trigger definition, search for Azure Event Grid and select the When a resource event occurs trigger:

We can configure our event subscription trigger as follows:

Here:

- Resource Type is the type of Azure resource whose events you want to subscribe to, in this case Microsoft.Storage.StorageAccounts.

- Resource Name is the name of your storage account.

- In the Event Type field, add all the events that you want to subscribe to (in this scenario, the Microsoft.Storage.BlobDeleted event).

This event returns a JSON body that contains information about the deleted blob. The body is something like the following:

```json
{
  "topic": "/subscriptions/YOURSUBSCRIPTIONID/resourceGroups/d365bcdemo/providers/Microsoft.Storage/storageAccounts/d365bcdemostorage",
  "subject": "/blobServices/default/containers/imagecontainer/blobs/MyFile.jpg",
  "eventType": "Microsoft.Storage.BlobDeleted",
  "id": "11856a49-a01e-0041-568d-c61731068475",
  "data": {
    "api": "DeleteBlob",
    "requestId": "11856a49-a01e-0041-568d-c61731000000",
    "eTag": "0x8DA94A4F06C103D",
    "contentType": "image/jpeg",
    "contentLength": 71982,
    "blobType": "BlockBlob",
    "url": "https://d365bcdemostorage.blob.core.windows.net/imagecontainer/MyFile.jpg",
    "sequencer": "000000000000000000000000000021AF0000000001accbc7",
    "storageDiagnostics": {
      "batchId": "87a5c50f-4006-0076-008d-c6c59d000000"
    }
  },
  "dataVersion": "",
  "metadataVersion": "1",
  "eventTime": "2022-09-12T09:55:35.5507773Z"
}
```
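If you prefer working with typed objects in the Azure Function rather than raw JSON, the payload above can be mapped to a couple of plain classes with System.Text.Json. This is a sketch under two assumptions: the class names (StorageEventGridEvent, BlobDeletedData, StorageEventReader) are illustrative and not taken from the original project, and the function receives a single event object as forwarded by the Logic App (Event Grid itself posts an array of events to a raw webhook).

```csharp
#nullable enable
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

// Typed view of the fields of the Blob Storage event used in this post (illustrative names).
public sealed class BlobDeletedData
{
    [JsonPropertyName("api")] public string? Api { get; set; }
    [JsonPropertyName("contentType")] public string? ContentType { get; set; }
    [JsonPropertyName("contentLength")] public long ContentLength { get; set; }
    [JsonPropertyName("blobType")] public string? BlobType { get; set; }
    [JsonPropertyName("url")] public string? Url { get; set; }
}

public sealed class StorageEventGridEvent
{
    [JsonPropertyName("id")] public string? Id { get; set; }
    [JsonPropertyName("topic")] public string? Topic { get; set; }
    [JsonPropertyName("subject")] public string? Subject { get; set; }
    [JsonPropertyName("eventType")] public string? EventType { get; set; }
    [JsonPropertyName("eventTime")] public DateTimeOffset EventTime { get; set; }
    [JsonPropertyName("data")] public BlobDeletedData? Data { get; set; }
}

public static class StorageEventReader
{
    // Deserializes one event object; throws if the payload is the JSON literal null.
    public static StorageEventGridEvent Read(string json) =>
        JsonSerializer.Deserialize<StorageEventGridEvent>(json)
        ?? throw new JsonException("Event payload was null.");
}
```

Reading a deletion event then comes down to StorageEventReader.Read(body), after which fields such as EventType, Data.Url and Data.ContentLength are available as typed properties.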

To send an email with the details of the raised event, you can add an Outlook action like the following (select the output fields from the previous action):

What happens when you save the Logic App?

If you select your storage account and click on Events, you can see that an event subscription is added:

The created event subscription is our Logic App registered as a webhook. In this way, Azure Event Grid calls an HTTPS endpoint exposed by our Logic App when the deletion event is fired in the blob storage.
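The Logic App trigger takes care of a detail you would otherwise have to handle yourself: when a webhook subscription is created, Event Grid first posts a Microsoft.EventGrid.SubscriptionValidationEvent, and the endpoint proves it owns the URL by echoing data.validationCode back as {"validationResponse": "<code>"}. As a hedged sketch of what a custom (non-Logic App) C# endpoint would need to do, with the helper name EventGridHandshake being hypothetical:

```csharp
#nullable enable
using System.Text.Json;

// Sketch of the subscription validation handshake; the Logic App trigger does this for you.
public static class EventGridHandshake
{
    private const string ValidationEventType = "Microsoft.EventGrid.SubscriptionValidationEvent";

    // Given the POST body (Event Grid sends an array of events), returns the JSON response
    // that proves ownership of the endpoint, or null if the batch is not a validation request.
    public static string? TryBuildValidationResponse(string requestBody)
    {
        using JsonDocument doc = JsonDocument.Parse(requestBody);
        foreach (JsonElement ev in doc.RootElement.EnumerateArray())
        {
            if (ev.TryGetProperty("eventType", out JsonElement type) &&
                type.GetString() == ValidationEventType &&
                ev.TryGetProperty("data", out JsonElement data) &&
                data.TryGetProperty("validationCode", out JsonElement code))
            {
                // Echo the code back as {"validationResponse":"<code>"}.
                return JsonSerializer.Serialize(new { validationResponse = code.GetString() });
            }
        }
        return null;
    }
}
```

Any batch that is not a validation request (such as a real BlobDeleted event) returns null and would be processed normally.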

You can test the event subscription by uploading a file to the blob storage and then deleting it. You can see that an event is triggered:

If you go to the event subscriber (our Azure Logic App), you can see that it’s correctly triggered and that the output of the trigger contains the JSON body with the details of the deleted blob:

To handle this deletion, we pass this JSON body to an Azure Function (HttpTrigger) that parses it and:

- retrieves the operation from the eventType field (BlobDeleted or other events that you want to handle)

- retrieves the deleted file and container name from the url field

- copies the deleted file to a new storage account (backup_storage)

- writes a custom log to Application Insights

- …
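The parsing steps above could look roughly like the following C# sketch, based on System.Text.Json and System.Uri. It assumes the function receives one event object; DeletedBlobInfo, BlobEventParsing and the backup naming scheme in BackupBlobName are hypothetical, not taken from the author's repository.

```csharp
#nullable enable
using System;
using System.Text.Json;

// Result of parsing one blob event (hypothetical type).
public sealed record DeletedBlobInfo(string Operation, string Container, string BlobName);

public static class BlobEventParsing
{
    // Reads eventType and data.url from a single Event Grid event object.
    public static DeletedBlobInfo Parse(string eventJson)
    {
        using JsonDocument doc = JsonDocument.Parse(eventJson);
        JsonElement root = doc.RootElement;
        string eventType = root.GetProperty("eventType").GetString() ?? "";
        string url = root.GetProperty("data").GetProperty("url").GetString() ?? "";

        // "Microsoft.Storage.BlobDeleted" -> "BlobDeleted"
        string operation = eventType.Substring(eventType.LastIndexOf('.') + 1);

        // AbsolutePath is "/<container>/<blob name>"; the blob name may itself contain '/'.
        string path = Uri.UnescapeDataString(new Uri(url).AbsolutePath).TrimStart('/');
        int slash = path.IndexOf('/');
        if (slash <= 0 || slash == path.Length - 1)
            throw new FormatException($"Not a blob URL: {url}");

        return new DeletedBlobInfo(operation, path.Substring(0, slash), path.Substring(slash + 1));
    }

    // Hypothetical backup path: keep the source container as a folder prefix in the backup container.
    public static string BackupBlobName(DeletedBlobInfo info) => $"{info.Container}/{info.BlobName}";
}
```

For the url in the sample event, Parse returns the operation BlobDeleted, the container imagecontainer and the blob name MyFile.jpg. Splitting only on the first '/' keeps virtual folders (for example 2022/MyFile.jpg) inside the blob name.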

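The Azure Function has a method for copying the blob from the original storage to the backup storage. Keep in mind that Microsoft.Storage.BlobDeleted is raised after the blob has been deleted, so its content must still be recoverable when the function runs (for example, with blob soft delete or versioning enabled on the source storage account). A sketch of this method is the following: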

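```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.Storage.Blob;   // CloudBlockBlob (Microsoft.Azure.Storage.Blob package)
using Microsoft.Extensions.Logging;   // ILogger

public static class BlobBackup // illustrative class name
{
    private static async Task CopySourceBlobToDestination(CloudBlockBlob sourceBlob, CloudBlockBlob destinationBlob, ILogger log)
    {
        log.LogInformation("Blob copy started...");
        try
        {
            // Stream the source blob content into the destination (backup) blob.
            using (var stream = await sourceBlob.OpenReadAsync())
            {
                await destinationBlob.UploadFromStreamAsync(stream);
            }
            log.LogInformation("Blob copy completed successfully.");
        }
        catch (Exception ex)
        {
            log.LogError(ex, "Blob copy error: {Message}", ex.Message);
            // Rethrow so the invocation fails visibly instead of silently losing the backup.
            throw;
        }
        finally
        {
            log.LogInformation("CopySourceBlobToDestination completed");
        }
    }
}
```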

The complete Azure Function code is available on my GitHub here.

In this way, we’ve handled the whole process in a fully serverless way, without involving the ERP (this is not an ERP task, is it?).

With the Event Grid subscription you can also do more, for example filtering on subjects. Imagine that I want to handle this process only when a PDF file is deleted from the storage account (so the process is triggered when a .pdf file is deleted and not when a .jpg file is deleted).

To do that, select your storage account, click on Events and then select your registered event subscription (in this case the Logic App webhook). In the Filters tab of the event subscription, enable subject filtering and specify something like the following (for this scenario, Subject Ends With set to .pdf):
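Subject filters are simple string matches on the event's subject field (for blobs, /blobServices/default/containers/<container>/blobs/<blob name>). The following C# sketch mimics the decision a Subject Begins With / Subject Ends With filter makes, including the default case-insensitive matching; SubjectFilter is a hypothetical helper for illustration, not part of any Azure SDK.

```csharp
using System;

// Mimics how an Event Grid subject filter decides whether to deliver an event.
// Hypothetical helper mirroring the "Subject Begins With" / "Subject Ends With" settings.
public sealed class SubjectFilter
{
    public string SubjectBeginsWith { get; init; } = "";
    public string SubjectEndsWith { get; init; } = "";
    // Subject matching is case-insensitive unless case-sensitive matching is enabled.
    public bool CaseSensitive { get; init; }

    public bool Matches(string subject)
    {
        StringComparison cmp = CaseSensitive ? StringComparison.Ordinal : StringComparison.OrdinalIgnoreCase;
        // An empty prefix or suffix matches every subject.
        return subject.StartsWith(SubjectBeginsWith, cmp) && subject.EndsWith(SubjectEndsWith, cmp);
    }
}
```

With SubjectEndsWith set to ".pdf", a deleted Invoice.PDF is delivered while MyFile.jpg is filtered out; adding a SubjectBeginsWith prefix such as /blobServices/default/containers/imagecontainer/ would also restrict the subscription to a single container.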

And you can also do more (like aggregation of messages and more), but that’s another story.

As you can see, a business process previously handled in AL code, with lots of reliability problems, is now handled entirely by the Azure platform with serverless processing. My “Leave the ERP doing the ERP” mantra is always valid…

This was originally posted here.
