Hi,

We are migrating from Data Export Service (DES) to Azure Synapse Link. We were able to export data from Dataverse to Data Lake,

but we are facing issues configuring the Data Factory pipeline that exports data from Data Lake to Azure SQL.

We are following this doc:

https://docs.microsoft.com/en-us/power-apps/maker/data-platform/azure-synapse-link-pipelines#use-the-solution-template-in-azure-data-factory

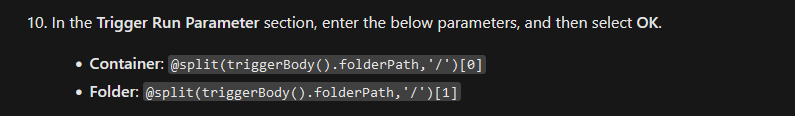

Section 10 has parameters for Container and Folder that are set when configuring the trigger.

When we run the pipeline, we get this error:

Operation on target LookupModelJson failed: ErrorCode=AdlsGen2OperationFailed,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=ADLS Gen2 operation failed for: 'filesystem' does not match expected pattern '^[$a-z0-9](?!.*--)[-a-z0-9]{1,61}[a-z0-9]$'.. Account: 'dverep00test02'. FileSystem: '@split(triggerBody().folderPath,''. Path: '')[0]/@split(triggerBody().folderPath,'/')[1]/model.json'..,Source=Microsoft.DataTransfer.ClientLibrary,''Type=Microsoft.Rest.ValidationException,Message='filesystem' does not match expected pattern '^[$a-z0-9](?!.*--)[-a-z0-9]{1,61}[a-z0-9]$'.,Source=Microsoft.DataTransfer.ClientLibrary,'

I don't know how to check what triggerBody().folderPath contains or returns. I don't see any console or similar tool where I could evaluate it and inspect the value, so I can't tell why the result of @split(triggerBody().folderPath,'/')[0] does not match the pattern.
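
The pattern itself can at least be checked offline. Below is a minimal Python sketch (not part of the Microsoft template) that mimics the split and tests the first segment against the regular expression copied from the error message. The folder path `mycontainer/myfolder` is a hypothetical value, since I don't know what the trigger actually passes. The second value is the FileSystem string exactly as it is printed in the error. The helper names `first_segment` and `is_valid_filesystem` are made up for illustration.

```python
import re

# Pattern copied verbatim from the ADLS Gen2 error message.
FILESYSTEM_PATTERN = re.compile(r"^[$a-z0-9](?!.*--)[-a-z0-9]{1,61}[a-z0-9]$")


def first_segment(folder_path: str) -> str:
    """Mimic @split(folderPath, '/')[0]: the text before the first '/'."""
    return folder_path.split("/")[0]


def is_valid_filesystem(name: str) -> bool:
    """Return True if the name satisfies the pattern from the error."""
    return FILESYSTEM_PATTERN.match(name) is not None


# Hypothetical trigger value; the real folderPath is unknown.
sample_folder_path = "mycontainer/myfolder"

# FileSystem value exactly as it is printed in the error message.
filesystem_from_error = "@split(triggerBody().folderPath,'"

for candidate in (first_segment(sample_folder_path), filesystem_from_error):
    print(f"{candidate!r}: valid={is_valid_filesystem(candidate)}")
```

The FileSystem string from the error starts with `@`, which the pattern does not allow as a first character. Judging only from the error text, it looks as if the expression text itself, rather than its evaluated result, ended up as the file system name.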

The blob container has data (CSV files) and a model.json (with data) in the root of the container.

We followed the instructions exactly and recreated the pipeline several times, but it didn't help.

I did not find any information about this error online.

Has anyone had the same problem?

I would appreciate any help.

Thanks.