Data Integration-Dynamics 365 Commerce to Dynamics 365 Customer Insights - Data

Views (445)

Audience - Solution architect, Developer

Use case example - Segment based Journeys

Use case example - Segment based Journeys

Introduction and Context

This blog post is a part of the series that began with the 1st blog post Better Together: Dynamics 365 Commerce & Customer Insights, and is focusing on the data integration from Dynamics 365 Commerce to Dynamics 365 Customer Insights - Data.

Integrating Dynamics 365 Commerce with Customer Insights offers retailers a powerful way to enhance their customer engagement and operational efficiency. By unifying customer data from various touchpoints, retailers can gain a comprehensive view of each customer, enabling them to deliver personalized experiences and targeted marketing campaigns. This integration allows store associates to access valuable insights into customer preferences and behaviors, helping them make informed recommendations and improve customer satisfaction. Additionally, it supports advanced clienteling, which can boost customer loyalty and drive sales by ensuring that interactions are relevant and timely. Overall, this integration helps retailers streamline processes, reduce customer churn, and maximize revenue opportunities.

Dynamics 365 Commerce is a cloud-based platform that enables retailers to deliver personalized, seamless, and omnichannel shopping experiences across various channels and touchpoints.

Part of the Dynamics 365 Customer Insights product, Customer Insights - Data (CI-D) is Microsoft’s AI-powered Customer Data Platform (CDP) that helps organizations unify, enrich, and generate insights from customer data from multiple sources and gain actionable insights to drive customer engagement and loyalty. Customer Insights - Journeys (CI-J) is the associated journey orchestration module that can leverage the insights from the CDP to inform personalized marketing campaigns.

To leverage the full potential of both solutions, it is essential to start by bringing the data from Dynamics 365 Commerce to Dynamics 365 Customer Insights - Data as a foundation to unlock use cases and scenarios we will detail in the rest of this series.

For this article, we are looking at Dynamics 365 Commerce as one of the data sources that will feed Dynamics 365 Customer Insights - Data and how to achieve this integration.

Due to the distinct platforms Dynamics 365 Commerce and Dynamics 365 Customer Insights operate on – and the differences between their respective data models, schemas, and database structures – the integration is not automatic and needs to be carefully planned and executed.

Finally, with large amounts of data, there is a need for effective and scalable approaches to manage this data integration, which includes ETL/ELT (Extract, Load, Transform) methods and synchronization processes as detailed in this high-level architecture schema:

Fig. 1: end-to-end batch data integration flow from Dynamics 365 Commerce to Dynamics 365 Customer Insights. Each number in the schema corresponds to a section of the current article.

1. Exporting Dynamics 365 Commerce Data to a Lake

The first step of the process is to get the Dynamics 365 Commerce data into a Lake where it can be prepared to the right level (see section 2).

Several options and variations exist to accomplish this, and you are maybe already leveraging some of those for BI & Analytics scenarios, so you might be able to benefit from those pre-existing investments.

In the context of this blog post, we will assume a blank state, and we will focus on two reference architectures, aligned with the products’ strategy and roadmap.

For instance, we will not cover the “Export to Data Lake” capability of Dynamics 365 Commerce as it is deprecating November 1, 2024.

These two reference architectures for data integration are respectively geared toward SaaS and PaaS, are equally valid, and are recommended as being highly scalable while offering you control over their performance.

Choosing one or the other will mainly depend on factors such as your internal strategy for SaaS versus PaaS, the pre-existing investments in the corresponding technologies and the associated teams’ expertise, as well as the total cost of ownership.

| N.B.: when exporting to a Lake, you can choose both standard and custom Finance and Operations entities and tables. There are some limitations though to be considered with D365 Commerce tables and entities as detailed in tables limitations and entities known limitation. For example, change tracking cannot be enabled for all Finance and Operations entities. If the chosen entity is unavailable because of the change tracking limitation, you might be able to choose the tables that comprise the data from that entity. |

1.1 Exporting to SaaS

This approach leverages Microsoft Dataverse direct link to Microsoft Fabric (or “Link to Fabric” for short) to make the Dynamics 365 Commerce data available in Fabric and OneLake where data preparation can happen before exposing that prepared data to Dynamics 365 Customer Insights - Data.

OneLake is the Lake storage layer that comes with Microsoft Fabric and is designed to be the single place for all your analytics data.

All Fabric data items like Data Warehouses and Lakehouses store their data automatically in OneLake in Delta .parquet format.

When using Link to Fabric, selected Dynamics 365 Commerce data gets stored in Delta format in an internal Dataverse Managed Data Lake (DV MDL) and exposed to Fabric as a “Shortcut”, hence considered part of your OneLake. This means it will have an impact on your Dataverse capacity consumption, so it is good practice to monitor it in Power Platform admin center.

1.2 Exporting to PaaS

This approach leverages the Azure Synapse Link for Dataverse with Azure Data Lake framework to make the Dynamics 365 Commerce data available in your Azure Data Lake Storage gen2 (ADLS gen2) Storage Account where data preparation can happen before exposing that prepared data to Dynamics 365 Customer Insights - Data.

Azure Synapse Link for Dataverse is a service designed for enterprise big data analytics, providing scalable high availability together with disaster recovery capabilities.

Azure Synapse Link for Dataverse lets you choose what data from Finance and Operations Apps is to be continuously exported, conforming to the Common Data Model (CDM) framework, where actual data files are stored as partitioned .csv files along with manifest metadata .json files describing the content and location of the actual data files.

| N.B.: as mentioned in the introduction, other variations exist such as Azure Synapse Link for Dataverse with Azure Synapse Workspace to directly export to a Synapse Workspace instead of to an ADLS gen2 Storage Account. While a valid approach, especially if you are already leveraging this pattern for other use cases, we are not recommending it as the main PaaS approach in this article, mainly because exporting to an ADLS gen2 Storage Account instead is a more generic pattern and will give you more flexibility in the tools you can use for data preparation. When selecting Finance and Operations data with Azure Synapse Link for Dataverse with Azure Synapse Workspace, this feature will use your provided Synapse Spark pool to convert the CDM structures (i.e., .csv + .json files ) to Delta .parquet on the fly. Delta .parquet is a good format, providing better performance overall than .csv, and we recommend using it further in this article as it relates to data preparation and integration with CI-D. Though, having access to the .csv files in your ADLS gen2 Storage Account will give you the possibility to use any data transformation tool - including but not limited to Azure Synapse Analytics, such as Azure Data Factory, Databricks, etc. - both to convert to Delta .parquet format and do the actual data preparation, thus potentially mutualizing your integration pipelines and optimizing resource consumption. As a final note, we’ll cover how Customer Insights - Data will read data from Lake once it’s transformed in section 3, but we won’t recommend using the Azure Synapse Analytics data source connector from CI-D as it comes with some limitations, such as lack of support for incremental refreshes or for firewalled Workspaces, so you’ll anyway need an ADLS gen2 Storage Account to expose data to CI-D for best integration. |

2. Preparing Dynamics 365 Commerce data in Lake

No matter if you have opted for a PaaS or SaaS approach, data flowing from Dynamics 365 Commerce to Dynamics 365 Customer Insights - Data will require some specific data transformations to be usable.

Data transformation is an essential and required step because the data models in Dynamics 365 Commerce and how they would be used in Dynamics 365 Customer Insights - Data are very different.

In Dynamics 365 Commerce, data model is optimized for transactional workloads, for instance “Orders” information could be represented in more than one table, related to each other, and each containing a piece of the overall order information.

On the other hand, for Dynamics 365 Customer Insights - Data, data model should be optimized for business consumption and analytical workloads, for instance “Orders” would be expected to be integrated as a single table, denormalized, containing necessary and sufficient information, and ideally with easily understandable column names from a business standpoint.

Please refer to this excellent blog post to understand more about Dynamics 365 Customer Insights - Data best practices for data modelling and data quality.

Fig. 2: high-level data model mapping from Dynamics 365 Commerce to Dynamics 365 Customer Insights - Data.

Master data

Dynamics 365 Commerce master data typically falls into categories such as people, places, and concepts; it includes for example entities such as customers, vendors, and projects.

Customers from Dynamics 365 Commerce will correspond to the “Profile” category for Dynamics 365 Customer Insights - Data and will be the bare minimum master data that you will want to bring in to contribute to Profile Unification.

Other master data can also be brought in depending on your business requirement, but the best practice is to only bring what will support your use cases to avoid over complexifying the CI-D data model and slowing down the system by processing unnecessary data.

Transactional / Behavioral data

Dynamics 365 Commerce orders, payments and online storefront actions will correspond to the “Activity” category for Dynamics 365 Customer Insights - Data.| N.B.: additional master data can fall into two categories as it relates to its data modeling for CI-D. If the same master data is meant to be reusable across several “Profile” or “Activity” tables, bring it as a dedicated “Supporting” table with proper relationships to the corresponding “Profile” or “Activity”. Example: if you have a Product table that includes several attributes (e.g., Product category, Product range, Product color, etc.) that would help aggregate or filter transactions for some measures, and those products would be referenced by several “Activities” (e.g., orders, page visits, etc.) then it makes sense to not denormalized it in each transaction table, but to bring it as a dedicated “Supporting” table. On the other hand, if the master data is only needed once, consider already de-normalizing it into the corresponding “Profile” or “Activity” table during data preparation, a join once upfront early in the integration will be less resource intensive overall than multiple joins, one each time you want to calculate a specific Measure or Segment in Dynamics 365 Customer Insights - Data. Example: if you have only one simple Geography hierarchy (e.g., Country, Region, City) and it is only relevant for the Customers, bring it directly as part of the Customer table contributing to Profile Unification so that it is directly included as part of the Unified Profiles. |

2.1 Number of layers in the Lake

There are two approaches to refine the data model from source (as provided by Dynamics 365 Commerce) to target (as expected by Dynamics 365 Customer Insights - Data)

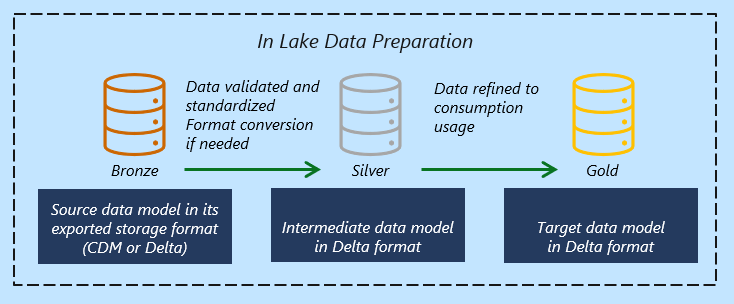

Fig. 3.a: In Lake data preparation – the medallion architecture.

Fig. 3.a: In Lake data preparation – the medallion architecture.

In typical Data Lakes and Lakehouses, the preferred approach is to follow the medallion architecture principle, with 3 layers, from Bronze to Silver to Gold:

- Bronze is the exact copy of the data as in the source (i.e., CDM .csv + .json or Delta exported from Dynamics 365 Commerce depending on the export approach)

- Silver is an intermediary layer where data from all sources are going through similar standardization, normalization and data quality steps (e.g., ensuring that all date/time fields are converted into a similar format, with proper time zone and proper default value, etc.) as well as format conversion (i.e. from CDM .csv + .json to Delta .parquet in our context if needed)

- Gold is the final layer where data has been combined, denormalized, pivoted, etc. and is fit for purpose to a specific consumption scenario (i.e., Dynamics 365 Customer Insights – Data)

This is a recommended approach to mutualize efforts and ensure consistency across your whole data estate in the Lake, so this is the default pattern we recommend, and that you might already be using if you have an existing Lake.

Please read more details on the medallion architecture in the context of Fabric or Databricks as examples.

Fig. 3.b: In Lake data preparation – a simplified integration architecture.

As a fallback, if you do not have an existing Data Lake or Lakehouse medallion architecture in place that you would use to support the data integration between Dynamics 365 Commerce to Dynamics 365 Customer Insights - Data, you could opt for a simplified integration architecture with only 2 layers in your Lake:

- Source layer corresponds to the Bronze layer of the medallion architecture

- Target layer combines the data preparation and transformation tasks from the Silver and Gold layers of the medallion architecture in one step

2.2 Sample Fabric notebook for the SaaS oriented approach

Below is a sample Fabric notebook to prepare the customer and transactions data to be made available to Dynamics 365 Customer Insights - Data. The code is shared under the sample code notice.

The sample notebook combines the data from multiple Dynamics 365 Commerce tables(Customer - DirPartyTable,LogisticsPostalAddress,LogisticsLocation,DirPersonName etc. Transactions - SaleTables,SalesLine etc.) and transforms and filters the data and converts the data to a denormalized structure to be consumed by Dynamics 365 Customer Insights.

3. Reading prepared data from Lake with Dynamics 365 Customer Insights - Data

As mentioned in the previous section, the recommended format for storing data that has been prepared for Dynamics 365 Customer Insights - Data consumption is Delta tables (.parquet files).

Delta is a format that offers best performance overall, and brings ACID (Atomicity, Consistency, Isolation, Durability) transactions to big data workloads, eliminating the legacy requirement of big data architecture that needed to reprocess all data with every job.

Dynamics 365 Customer Insights - Data is evolving to be fully Delta compatible for its internal jobs as per Accelerate time to insights with data in Delta Lake format | Microsoft Learn which means that if your data source is also Delta, and if you are using the appropriate data source connector, you will benefit from its advantage all the way through.

By licensing entitlement, you can refresh your Dynamics 365 Customer Insights - Data instance up to 4 times a day, and even if most batch processing and insights calculation do not require such a frequency, using Delta all the way through will help you get the most out of the platform by reducing the time required for a single end-to-end refresh.

This is why we are strongly recommending to have your prepared data in your Data Lake or Lakehouse in this format and to use the corresponding Dynamics 365 Customer Insights - Data Connect to Delta tables data source connector.

With this pattern, Dynamics 365 Customer Insights - Data does not move or copy the data source data but simply reads it from the Lake (i.e., Gold layer) when one of its jobs needs it.

| N.B.: If you have opted for a SaaS approach with Fabric and OneLake for data preparation, please note that Dynamics 365 Customer Insights - Data does not support this direct connection as of today. You can create a PaaS ADLS gen2 Storage Account, linked to your Fabric workspace as a shortcut, that will serve the purpose of the Gold layer to expose data to Dynamics 365 Customer Insights - Data and that will be read with the same recommended CI-D data source connector (i.e. Connect to Delta tables). We are NOT recommending using one of the Power Query connectors to ingest data from Fabric through its SQL endpoint as this will imply extra data movement, latency, and will break the Delta capabilities of Dynamics 365 Customer Insights - Data |

4. Customers, Unified Customer Profiles and Contacts

As such, it is important to understand and clarify how this consumer is represented and will flow between the Apps.

Each App has its distinct definition and structure to support this:

- In Dynamics 365 Commerce, Customer profile is a single functional entity and normalized into several tables.

- In Dynamics 365 Customer Insights - Data, the Customer Profile is the table in which CI-D reconcile the different demographic data points from the different source systems through the Customer Profile Unification process, and is connected to the associated transactional or behavioral Activities to help generate Insights.

- In Dynamics 365 Customer Insights - Journeys, the Contact table in Dataverse is the natural starting point to build either Segment-based or Trigger-based Journeys to reach out to your consumers. The Contact table is also widely used in other Dynamics 365 Customer Engagement Apps such as Dynamics 365 Sales, etc.

Fig. 4: Architecture for Customers, Unified Customer Profiles and Contacts integration

4.1 Between Dynamics 365 Commerce and Dynamics 365 Customer Insights - Data

This path of the integration corresponds to the approach that was described in sections 1, 2 and 3 of the present article for all Dynamics 365 Commerce data, but here zoomed in specifically to just the Customer entity from Dynamics 365 Commerce.

It is important to ensure that all relevant information from the Customer entity in Dynamics 365 Commerce can flow to Dynamics 365 Customer Insights - Data with full control on data preparation and data quality if the synchronization mechanism that is described in following sub-section 4.2. is not enough.

4.2 Between Dynamics 365 Commerce and Dataverse

This path of the integration is critical and mandatory to ensure that the Dataverse Contact table is populated and can be used by Dynamics 365 Customer Insights - Journeys (and other Dynamics 365 Customer Engagement Apps).

Though, it is not necessarily sufficient for Dynamics 365 Customer Insights - Data, as the recommended approach is to use Dual-write which will not necessarily map all information between the Dynamics 365 Commerce Customer entity and the Dataverse Contact table. Hence why we are still recommending also using in parallel the method described in previous sub-section 4.1.

Dual-write is an out-of-box infrastructure that provides near-real-time integration between customer engagement apps and finance and operations apps.

Dual-write provides tightly coupled, bidirectional integration between finance and operations apps and Dataverse. Any data change in finance and operations apps causes writes to Dataverse, and any data change in Dataverse causes writes to finance and operations apps. This automated data flow provides an integrated user experience across the apps.

In our context, we recommend using the Integrated customer master with the Customers V3 (contacts) mapping template.

| N.B.: When enabling data entities for Dual-write, always test in a sandbox to ensure that performance and business continuity support your heaviest workloads. There are some known limitations using Dual-write, and some constraints when it comes to initial synchronization. If you do not want to use Dual-write then you should design your own custom integration from commerce to Dataverse contact entity. |

4.3 Between Dataverse and Dynamics 365 Customer Insights - Data

- Some relevant Contact information and attributes could be maintained and live in parallel in the Dataverse Contact table while not being mapped and synchronized back to the Dynamics 365 Commerce Customer entity as part of the Dual-write setup. But we would still want that information that only exists in the Contact table to be available as well in Dynamics 365 Customer Insights - Data

- It is a pre-requisite to achieve the “closed loop” between all structures in the 3 Apps, namely between the Dynamics 365 Customer Insights - Data Customer Profile table and the Dataverse Contact table used by Dynamics 365 Customer Insights - Journeys. This last aspect will be described in subsequent sub-section 4.4

| N.B.: As of today, this connector does not offer yet fine control over data selection and brings the table “as they are.” This will evolve in the coming weeks to offer more flexibility and even better performance end-to-end by embracing Delta format behind the scenes as documented in this release plan article |

4.4 Between Dynamics 365 Customer Insights - Data and Dynamics 365 Customer Insights - Journeys

This path of the integration is automatic and does not require any specific configuration if its pre-requisites are respected.

Having this integration between Customer Profiles from Dynamics 365 Customer Insights - Data and Contacts in Dataverse will unlock many scenarios by ensuring that Dynamics 365 Customer Insights - Journeys can operate directly on Contacts, enriched with extra attributes and insights from Dynamics 365 Customer Insights - Data Customer Profiles, be it for segment creation, email personalization or journey branching.

CustomerId backstamping is the name of this automatic process that will ensure that each Contact in Dataverse has a relationship back to its corresponding Customer Profile. CustomerId being the name of the Primary Key generated by Dynamics 365 Customer Insights - Data to uniquely identify a Customer Profile.

The two pre-requisites are that the Contact table:

- is ingested in Dynamics 365 Customer Insights - Data with one of the supported data source connectors as described in precedent sub-section 4.3

- run through the Profile Unification with its Primary Key (ContactId) properly setup

| N.B.1.: While the Contact table from Dataverse could also be ingested in ynamics 365 Customer Insights - Data through the corresponding power query connector, and preserve the CustomerId backstamping process, we do not recommend this approach as it will not scale and perform as well as using the Microsoft Dataverse data source connector. It would also break the capability for CI-D profile Unification to leverage the Delta format capabilities for improved performances. We could only recommend this approach through Power Query if your Contact table volume is not too high (e.g., < 5 million records), that you do not have to use complex transformation or joins in your Power Query dataflow, and that you do need Optionset labels and not just Optionset key values. N.B.2.: Note that Dynamics 365 Customer Insights - Journeys can also operate on segments of Customer Profiles created and shared from Dynamics 365 Customer Insights - Data, but it’s not the most flexible path and should be recommended mostly for scenarios where some CI-D Customer Profiles are created from an external source system without a matching Contact in Dataverse. |

- Recap

Fig. 5.a: SaaS oriented end-to-end data integration architecture

Fig. 5.b: PaaS oriented end-to-end data integration architecture

Blog post series

- Better Together: Dynamics 365 Commerce & Customer Insights-Better Together: Dynamics 365 Commerce & Customer Insights

- Data Integration from D365 Commerce to Dynamics 365 Customer Insights- Data. This blog post / article

- Dynamics 365 Commerce and Dynamics 365 Customer insights - near real time scenarios – coming soon.

Like

Like Report

Report

*This post is locked for comments