Hi HimaM,

Since 35000 records are too many to process in one pass, you need a different approach: reduce the scope of duplicate detection.

Run detection on fewer than 5000 records at a time, delete the duplicates it finds, and repeat this in batches.

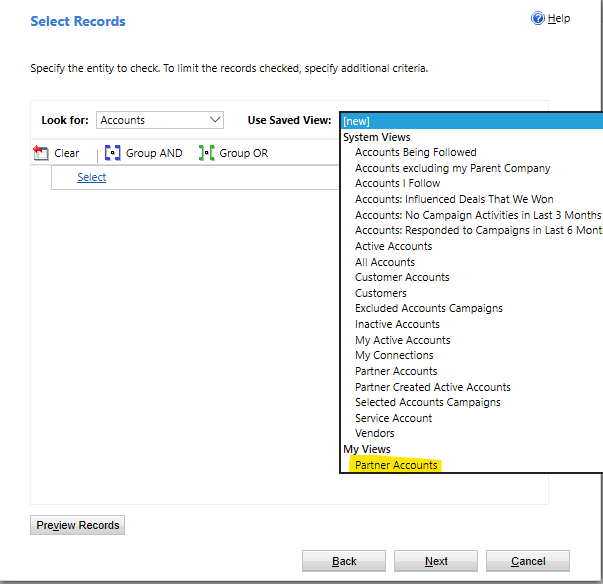

1. First, create a personal view of the entity whose duplicate records you need to delete. Set the view's filter a little more strictly, so that the number of records in this view is around 5000, or only slightly more than 5000.

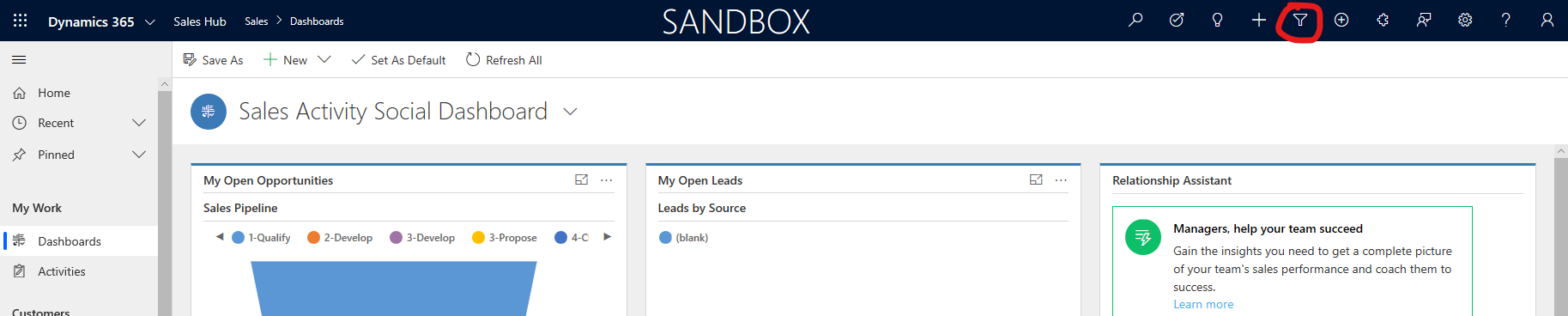

Use Advanced Find to quickly create personal views.

Then keep creating personal views in the same way. Each personal view should use a different filter and contain around 5000 records. Continue until the personal views together cover all records of the entity.

In this way, the huge number of records in one view is divided into an appropriate number of records across multiple personal views.
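To plan the filters before creating the views, a rough sketch like the following may help. It only does the arithmetic and does not call any Dynamics 365 API. It assumes the views are filtered by the month of Created On, and the function name `plan_views` and the monthly counts are made up for illustration, so substitute the counts you actually see in Advanced Find.

```python
from typing import List, Tuple


def plan_views(counts: List[Tuple[str, int]], limit: int = 5000) -> List[List[str]]:
    """Group consecutive buckets into views holding at most `limit` records each.

    `counts` is a list of (bucket label, record count) pairs, for example
    one pair per Created On month. This is a hypothetical planning helper.
    """
    views: List[List[str]] = []
    current: List[str] = []
    current_total = 0
    for label, count in counts:
        if count > limit:
            # A single bucket is already too large: it needs a narrower filter.
            raise ValueError(f"bucket {label} has {count} records; split it further")
        if current and current_total + count > limit:
            # Adding this bucket would exceed the limit, so close the current view.
            views.append(current)
            current, current_total = [], 0
        current.append(label)
        current_total += count
    if current:
        views.append(current)
    return views


# Hypothetical monthly record counts that add up to 35000.
monthly_counts = [
    ("2023-01", 4200), ("2023-02", 3100), ("2023-03", 1800),
    ("2023-04", 4900), ("2023-05", 2500), ("2023-06", 2400),
    ("2023-07", 3600), ("2023-08", 1300), ("2023-09", 4700),
    ("2023-10", 3000), ("2023-11", 1900), ("2023-12", 1600),
]

views = plan_views(monthly_counts)
for number, months in enumerate(views, start=1):
    print(f"Personal view {number}: Created On in {months[0]} to {months[-1]}")
```

Each group returned corresponds to one personal view. Every month lands in exactly one group, so the views together cover all records without overlap.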

Then run a bulk duplicate detection system job on each of these personal views.

In this way, the scope of each duplicate detection job is reduced to a single personal view, so its result will contain fewer than 5000 records and the duplicates can be deleted successfully.